The Hidden Costs of ERP Silos

In 1999, Hershey’s ERP go-live became a cautionary tale. Despite investing in SAP, the company lost an estimated US $100 million in unfulfilled orders and experienced an 8% drop in share price. The issue wasn’t the software’s capability but the lack of cohesive governance. Finance, supply chain, and HR teams customized their modules in isolation, leading to brittle integrations and operational breakdowns.

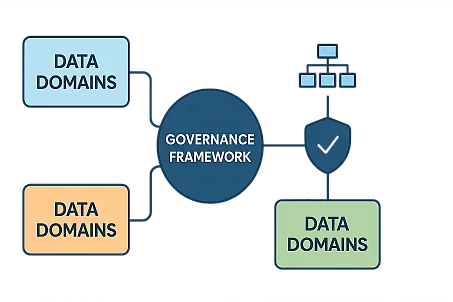

This scenario is far from unique. Many ERP projects struggle because they focus on technological integration while overlooking human and process-related challenges. Without a unified governance framework, even the most advanced ERP systems can exacerbate silos rather than eliminate them.

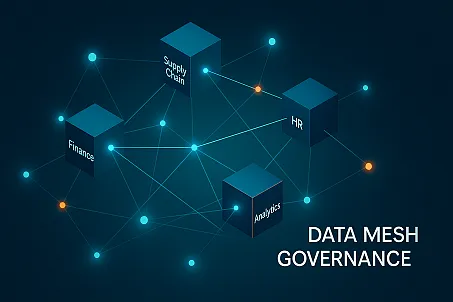

Introducing Data Mesh: A New Governance Paradigm

Data Mesh, introduced by Zhamak Dehghani, redefines data ownership by treating data as a product. Domains such as finance, logistics, and HR retain autonomy over their data but adhere to enterprise-wide standards through:

- Self-serve data infrastructure – Empowers teams to access and manage data without centralized bottlenecks.

- Federated computational governance – Ensures consistency through shared contracts, service-level agreements (SLAs), and automated policy enforcement.

Unlike traditional ERP models, Data Mesh embeds governance into the data lifecycle, preventing fragmentation while preserving agility.

ERP Pitfalls and Data Mesh Solutions

The following table compares common ERP failures with their Data Mesh counterparts and the governance antidotes that mitigate risks:

| Classic ERP Failure | Data Mesh Risk | Governance Solution |
|---|---|---|
| Over-customized modules create brittle integrations | Domains publish inconsistent schemas and quality metrics | Universal product contracts – Standardized SLAs for data lineage, freshness, and privacy. |
| Integration testing is deferred until late in the project | Data products launch without downstream validation | Shift-left contract testing – Validates data products early in the CI/CD pipeline. |
| Training focuses on module features, not end-to-end workflows | Teams optimize locally, ignoring enterprise KPIs | Cross-domain architecture reviews – Aligns initiatives with company-wide objectives. |
| One-off fixes increase maintenance costs | Duplicate datasets proliferate | Central catalog with reuse incentives – Encourages a "build once, share everywhere" culture. |
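To make the "shift-left contract testing" row more concrete, here is a minimal sketch of a check a CI job might run before a data product is published. Everything in it is an assumption made for illustration: the `FieldSpec` layout, the invoice field names, and the `validate_batch` helper are hypothetical and do not come from any specific Data Mesh platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    """One column in a hypothetical data product contract."""
    name: str
    type_: type
    nullable: bool = False


# Hypothetical contract for an "invoice" data product.
INVOICE_CONTRACT = [
    FieldSpec("invoice_id", str),
    FieldSpec("amount", float),
    FieldSpec("currency", str),
    FieldSpec("cost_center", str, nullable=True),
]


def validate_batch(records, contract):
    """Return a list of human-readable contract violations (empty if none)."""
    violations = []
    for i, record in enumerate(records):
        for spec in contract:
            if spec.name not in record:
                violations.append(f"record {i}: missing field '{spec.name}'")
                continue
            value = record[spec.name]
            if value is None:
                if not spec.nullable:
                    violations.append(f"record {i}: '{spec.name}' must not be null")
            elif not isinstance(value, spec.type_):
                violations.append(
                    f"record {i}: '{spec.name}' expected {spec.type_.__name__}, "
                    f"got {type(value).__name__}"
                )
    return violations
```

In a pipeline, a non-empty result would typically fail the build, so a schema drift in one domain is caught before any downstream consumer sees it.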

Case Studies: Data Mesh in Action

Early adopters of Data Mesh have demonstrated its potential to transform ERP governance:

- ING Bank implemented an eight-week proof-of-concept that enabled domain teams to build self-serve data products on a governed platform. The result was faster time-to-market for insights and improved compliance.

- Intuit found that nearly 50% of data workers’ time was wasted searching for data owners and definitions. By adopting Data Mesh, they reduced discovery friction and created a network effect of reuse across thousands of tables.

These organizations reported shorter validation cycles, lower storage costs, and more transparent audit trails, outcomes that traditional ERP implementations often struggle to achieve.

Four Steps to Mesh-Ready Governance

Implementing Data Mesh governance requires a structured approach. The following four steps provide a framework for success:

- Codify the Contract

Publish canonical data models (e.g., customer, invoice, shipment) with versioned SLAs and dashboards visible to all teams. This ensures consistency and transparency.
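One way to picture a versioned contract is as a small record that carries the schema, the SLA, and the owner, together with a rule for when a version bump is acceptable. The sketch below assumes a simple (major, minor) versioning scheme and a single freshness SLA; the `DataContract` class, its fields, and both helper functions are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataContract:
    """Hypothetical versioned contract for a canonical data model."""
    name: str
    version: tuple            # (major, minor), e.g. (1, 2)
    fields: dict              # column name -> type name, e.g. {"customer_id": "string"}
    freshness_sla_hours: int  # maximum allowed data age
    owner: str


def breaking_changes(old, new):
    """List changes in `new` that would break consumers of `old`."""
    problems = []
    for column, type_name in old.fields.items():
        if column not in new.fields:
            problems.append(f"removed column '{column}'")
        elif new.fields[column] != type_name:
            problems.append(
                f"changed type of '{column}': {type_name} -> {new.fields[column]}"
            )
    if new.freshness_sla_hours > old.freshness_sla_hours:
        problems.append("freshness SLA loosened")
    return problems


def version_bump_is_valid(old, new):
    """Breaking changes need a major bump; any other change needs a higher version."""
    if breaking_changes(old, new):
        return new.version[0] > old.version[0]
    return new.version > old.version
```

Under these assumptions, adding a column is a minor change, while removing or retyping one forces a new major version that consumers can opt into deliberately.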

- Automate Policy as Code

Embed governance directly into CI/CD pipelines. Automate lineage capture, PII masking, and quality gates to eliminate manual errors and accelerate deployments.
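As a rough illustration of policy as code, the sketch below masks PII columns and applies a null-rate quality gate to a batch of rows. The column names, the 1% threshold, and all function names are assumptions chosen for the example; a real deployment would load its policy from the governance platform rather than hard-code it. Note also that unsalted hashing is only a stand-in for a proper masking or tokenization service.

```python
import hashlib

# Hypothetical policy: PII columns must be masked before publication, and
# at most 1% of rows may have a null in a required column.
PII_COLUMNS = {"email", "phone"}
REQUIRED_COLUMNS = {"customer_id"}
MAX_NULL_RATE = 0.01


def mask_value(value):
    """Replace a PII value with a truncated SHA-256 digest (illustrative only)."""
    if value is None:
        return None
    return hashlib.sha256(str(value).encode("utf-8")).hexdigest()[:12]


def apply_pii_masking(rows):
    """Return copies of the rows with every PII column masked."""
    return [
        {key: (mask_value(val) if key in PII_COLUMNS else val) for key, val in row.items()}
        for row in rows
    ]


def quality_gate(rows):
    """Return (passed, report), where report maps required column -> null rate."""
    total = len(rows)
    report = {}
    for column in REQUIRED_COLUMNS:
        nulls = sum(1 for row in rows if row.get(column) is None)
        report[column] = nulls / total if total else 0.0
    passed = all(rate <= MAX_NULL_RATE for rate in report.values())
    return passed, report
```

A CI step could call `apply_pii_masking` and then `quality_gate`, exiting with a non-zero status when the gate fails so that the deployment stops automatically.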

- Appoint Integration Champions

Rotate enterprise architects or senior analysts into domain teams to act as diplomats for cross-functional reuse. This breaks down silos and fosters collaboration.

- Measure the Mesh

Track key metrics such as lead time from data request to insight, rework hours saved, and incident resolution speed. Celebrate improvements to the network, not just individual modules.
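The lead-time metric mentioned above could be computed along the following lines. This is a sketch under assumptions: each request is taken to be a dictionary with `requested_at` and `delivered_at` timestamps (hypothetical field names), and the median is used as the summary statistic because it is less sensitive to a few very slow requests.

```python
from datetime import datetime
from statistics import median

SECONDS_PER_DAY = 86400


def lead_time_days(requested_at, delivered_at):
    """Elapsed days between a data request and the delivered insight."""
    return (delivered_at - requested_at).total_seconds() / SECONDS_PER_DAY


def median_lead_time(requests):
    """Median lead time in days over completed requests; None if there are none."""
    durations = [
        lead_time_days(r["requested_at"], r["delivered_at"])
        for r in requests
        if r.get("delivered_at") is not None
    ]
    return median(durations) if durations else None
```

Tracking this number per quarter across all domains, rather than per module, keeps the focus on the network as a whole.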

Executive Takeaways: Balancing Autonomy and Cohesion

For executives, the message is clear: domain autonomy without enterprise glue risks recreating ERP silos in a cloud-native environment. To avoid this, treat federated governance as critical infrastructure:

- Fund governance initiatives like R&D projects, with dedicated budgets and resources.

- Hold leaders accountable for both local agility and global coherence.

- Invest in tools and training to support automated policy enforcement and cross-domain collaboration.

Action Item: At your next executive meeting, audit the three datasets underpinning your highest-stakes initiatives. If any lack a named owner, published contract, or automated enforcement, prioritize governance investments to prevent fragmentation.
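If catalog metadata is available in machine-readable form, the audit described above can be scripted. The sketch below assumes each catalog entry is a dictionary with `name`, `owner`, `contract_url`, and `enforcement_job` keys; these key names and the `audit_datasets` helper are hypothetical and would need to be mapped to whatever your catalog actually exposes.

```python
# Hypothetical governance controls every high-stakes dataset should have.
REQUIRED_CONTROLS = ("owner", "contract_url", "enforcement_job")


def audit_datasets(catalog_entries):
    """Map dataset name -> list of governance controls that are missing or empty."""
    gaps = {}
    for entry in catalog_entries:
        missing = [control for control in REQUIRED_CONTROLS if not entry.get(control)]
        if missing:
            gaps[entry["name"]] = missing
    return gaps
```

An empty result means every audited dataset has a named owner, a published contract, and automated enforcement; anything else is a ready-made list of governance gaps to prioritize.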

Figure 1: Hierarchical and Cyclical Relationships of Data Governance, MDM, Data Quality, and Metadata Management in ERP Systems

Figure 2: ERP Governance ROI, Cost of Poor Data, Case Study Comparison, and Automation Benefits

Figure 3: Five-Level ERP Data Governance Maturity Model and Capability Assessment

Figure 4: 24-Month Roadmap, Success Metrics, Technology Decision Matrix, Change Management, and Risk Mitigation